2.3.9.2. Content

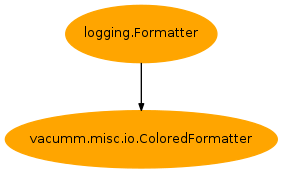

| Inheritance diagram: | |

|---|---|

In/Output tools

-

class ColoredFormatter(msg, full_line=False)

Bases: logging.Formatter

Log formatter with colors.

-

converter()

localtime([seconds]) -> (tm_year, tm_mon, tm_mday, tm_hour, tm_min, tm_sec, tm_wday, tm_yday, tm_isdst)

Convert seconds since the Epoch to a time tuple expressing local time. When ‘seconds’ is not passed in, convert the current time instead.

-

format(record)

Format the specified record as text.

The record’s attribute dictionary is used as the operand to a string formatting operation which yields the returned string. Before formatting the dictionary, a couple of preparatory steps are carried out. The message attribute of the record is computed using LogRecord.getMessage(). If the formatting string uses the time (as determined by a call to usesTime()), formatTime() is called to format the event time. If there is exception information, it is formatted using formatException() and appended to the message.

-

formatException(ei)

Format and return the specified exception information as a string.

This default implementation just uses traceback.print_exception().

-

formatTime(record, datefmt=None)

Return the creation time of the specified LogRecord as formatted text.

This method should be called from format() by a formatter which wants to make use of a formatted time. This method can be overridden in formatters to provide for any specific requirement, but the basic behaviour is as follows: if datefmt (a string) is specified, it is used with time.strftime() to format the creation time of the record. Otherwise, the ISO8601 format is used. The resulting string is returned. This function uses a user-configurable function to convert the creation time to a tuple. By default, time.localtime() is used; to change this for a particular formatter instance, set the ‘converter’ attribute to a function with the same signature as time.localtime() or time.gmtime(). To change it for all formatters, for example if you want all logging times to be shown in GMT, set the ‘converter’ attribute in the Formatter class.
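The converter mechanism described above belongs to the standard logging.Formatter, from which ColoredFormatter inherits. As a minimal sketch using only the standard library (the helper names make_utc_formatter and format_at are hypothetical), overriding converter on a single instance switches that formatter's timestamps to UTC:

```python
import logging
import time


def make_utc_formatter(fmt="%(asctime)s %(levelname)s %(message)s",
                       datefmt="%Y-%m-%d %H:%M"):
    """Return a logging.Formatter whose timestamps are expressed in UTC."""
    formatter = logging.Formatter(fmt, datefmt=datefmt)
    # Per-instance override: formatTime() calls self.converter(record.created).
    formatter.converter = time.gmtime
    return formatter


def format_at(formatter, created, msg="hello"):
    """Format a synthetic INFO record whose creation time is `created` (epoch seconds)."""
    record = logging.LogRecord("demo", logging.INFO, "demo.py", 0, msg, None, None)
    record.created = created
    return formatter.format(record)
```

For instance, format_at(make_utc_formatter(), 0.0) gives "1970-01-01 00:00 INFO hello" whatever the local time zone. Assigning logging.Formatter.converter = time.gmtime instead would change every formatter, as the paragraph above explains.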

-

usesTime()

Check if the format uses the creation time of the record.
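To illustrate what the full_line switch of ColoredFormatter controls, here is a minimal sketch built on logging.Formatter with ANSI escape sequences. The class name SimpleColoredFormatter and the level-to-color table are hypothetical; this is not vacumm's actual implementation.

```python
import logging

# Hypothetical level -> ANSI color mapping (not taken from vacumm).
ANSI_COLORS = {
    "DEBUG": "\033[36m",     # cyan
    "INFO": "\033[32m",      # green
    "WARNING": "\033[33m",   # yellow
    "ERROR": "\033[31m",     # red
    "CRITICAL": "\033[35m",  # magenta
}
RESET = "\033[0m"


class SimpleColoredFormatter(logging.Formatter):
    """Colorize either the level name only or the whole formatted line."""

    def __init__(self, fmt, full_line=False):
        super().__init__(fmt)
        self.full_line = full_line

    def format(self, record):
        color = ANSI_COLORS.get(record.levelname, "")
        if self.full_line:
            # Wrap the complete formatted message in one color.
            return color + super().format(record) + RESET
        # Temporarily wrap the level name, then restore it so that other
        # handlers sharing this record still see the original value.
        original = record.levelname
        record.levelname = color + original + RESET
        try:
            return super().format(record)
        finally:
            record.levelname = original
```

Restoring levelname in a finally clause matters because a single LogRecord is passed to every handler attached to a logger.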

-

class Logger(name, logfile=None, console=True, maxlogsize=0, maxbackup=0, cfmt='%(name)s [%(levelname)-8s] %(message)s', ffmt='%(asctime)s: %(name)s [%(levelname)-8s] %(message)s', asctime='%Y-%m-%d %H:%M', level='debug', colors=True, full_line=False, redirect_warnings=False, redirect_stdout=False, redirect_stderr=False)

Bases: object

Class for logging facilities when subclassing. Logging may be sent to the console and/or a log file.

Params: - name: Name of the logger.

- logfile, optional: Log file.

- console, optional: Log to the console.

- maxlogsize, optional: Maximum size of the log file before rotating it.

- maxbackup, optional: Maximum number of rotated files.

- ffmt, optional: Format of log messages in the log file.

- cfmt, optional: Format of log messages in the console.

- asctime, optional: Time format.

- level, optional: Initial logging level (see set_loglevel()).

- colors, optional: Whether to use colors when formatting terminal messages.

- full_line, optional: Whether to colorize the full line or just the level name.

- redirect_warnings, optional: Redirect messages issued by warnings.warn.

- redirect_stdout, optional: Redirect messages issued to sys.stdout.

- redirect_stderr, optional: Redirect messages issued to sys.stderr.

See also: logging module
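The console/file split and the maxlogsize/maxbackup parameters resemble what the standard library offers through logging.handlers.RotatingFileHandler (maxBytes, backupCount). A hedged sketch of a comparable setup with the standard library only; the helper setup_logger is hypothetical and does not reproduce the internals of Logger (no colors, no stream redirection):

```python
import logging
import logging.handlers
import sys


def setup_logger(name, logfile=None, console=True, maxlogsize=0, maxbackup=0,
                 cfmt="%(name)s [%(levelname)-8s] %(message)s",
                 ffmt="%(asctime)s: %(name)s [%(levelname)-8s] %(message)s",
                 asctime="%Y-%m-%d %H:%M", level="debug"):
    """Configure a stdlib logger with optional console and rotating-file output."""
    logger = logging.getLogger(name)
    logger.setLevel(getattr(logging, level.upper()))
    logger.propagate = False  # keep messages out of the root logger
    if console:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(logging.Formatter(cfmt))
        logger.addHandler(handler)
    if logfile:
        # maxBytes=0 disables rotation, as in RotatingFileHandler itself.
        handler = logging.handlers.RotatingFileHandler(
            logfile, maxBytes=maxlogsize, backupCount=maxbackup)
        handler.setFormatter(logging.Formatter(ffmt, datefmt=asctime))
        logger.addHandler(handler)
    return logger
```

Note that calling this helper twice with the same name attaches a second set of handlers, because logging.getLogger() returns the same logger object each time.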

-

class NcFileObj(ncfile, mode='r')

Bases: object

Simple class to properly manage a file object or a file name.

Examples:

    >>> nfo = NcFileObj('myfile.nc')
    >>> nfo.f('sst')
    >>> nfo.close() # or del nfo: close file descriptor

    >>> f = cdms2.open(path)
    >>> nfo = NcFileObj(f)
    >>> nfo.f('sst')
    >>> del nfo # or nfo.close(): here has no effect (descriptor still open)
    >>> f.close()
    >>> nfo = NcFileObj(f)
    >>> nfo.close() # close file descriptor
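The ownership rule shown in these examples (close the descriptor only if the wrapper opened it) can be sketched generically. The class FileOwner is hypothetical and uses the built-in open() in place of cdms2.open():

```python
class FileOwner:
    """Accept a file name or an already open file object.

    The underlying file is closed by close() only when this wrapper opened it.
    """

    def __init__(self, source, mode="r"):
        if isinstance(source, str):
            self.f = open(source, mode)
            self._owned = True
        else:
            self.f = source  # the caller keeps ownership of the descriptor
            self._owned = False

    def close(self):
        # Only close descriptors that this wrapper opened itself.
        if getattr(self, "_owned", False) and not self.f.closed:
            self.f.close()

    def __del__(self):
        self.close()
```

Using getattr in close() keeps __del__ safe when open() raised inside __init__ and the attributes were never set.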

-

class NcIterBestEstimate(files, time=None, toffset=None, timeid=None, tslices=None, keepopen=False, autoclose=True, id=None)

Bases: object

Iterator on netcdf forecast files.

This class is useful for reading the best estimate of netcdf forecast files.

Params: - files: A list of netcdf files.

- toffset, optional: An integer or a tuple of (<num>, ‘<units>’) to skip the first time steps of each file.

- timeid, optional: Time id. If None, it is guessed using guess_timeid().

- tslices, optional: A list of time slices (typically taken from a previous loop on files), to prevent guessing them.

- keepopen, optional: Keep all file descriptors open; otherwise, close those that can be closed once no longer used.

- autoclose: Deprecated.

Iterator: At each iteration, it returns f, tslice:

- f: the file descriptor (may be closed),

- tslice: the time slice, which is either:

  - a slice instance,

  - None if no time is found (thus no slice to perform),

  - False if there is nothing to read at all.

Example:

    >>> for f, tslice in NcIterBestEstimate(ncfiles, toffset=(1,'day')):
    ...     if tslice is False or tslice is None: continue
    ...     var = f(time=tslice)
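The best-estimate idea behind this iterator can be sketched as follows: after skipping the first toffset steps of each forecast file, every file contributes only the time steps that precede the first retained step of the next file. This is a simplified illustration, not the iterator's actual code: it assumes toffset is a plain integer (not a (<num>, '<units>') tuple), that time axes are lists of increasing numbers ordered by forecast start, and the hypothetical function best_estimate_slices only mimics the slice/False convention described above.

```python
def best_estimate_slices(time_axes, toffset=0):
    """Return, for each file, a slice of time indices to read, or False.

    time_axes: one list of increasing time values per forecast file,
    ordered by forecast start. toffset: number of leading steps to skip.
    """
    # First time value kept in each file once `toffset` steps are skipped.
    starts = [t[toffset] if len(t) > toffset else None for t in time_axes]
    result = []
    for i, times in enumerate(time_axes):
        if starts[i] is None:
            result.append(False)  # nothing left to read in this file
            continue
        # The next file that still has data takes over from its first kept step.
        next_start = next((s for s in starts[i + 1:] if s is not None), None)
        stop = toffset
        while stop < len(times) and (next_start is None or times[stop] < next_start):
            stop += 1
        result.append(slice(toffset, stop) if stop > toffset else False)
    return result
```

For example, three runs with times [0..4], [2..6] and [4..8] yield slice(0, 2), slice(0, 2) and slice(0, 5): the last file, having no successor, is read entirely.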

-

class Shapes(input, m=None, proj=False, inverse=False, clip=True, shapetype=None, min_area=None, sort=True, reverse=True, samp=1)

Bases: object

A class to read shapefiles and return GEOS objects. Inspired by basemap.readshapefile.

Here are the conversion rules from shapefile to GEOS objects:

- Points and multipoints are interpreted as Points.

- Polylines are interpreted as LineString.

- Polygons are interpreted as Polygons.

Params: - input: Refers to a shapefile or is a Shapes instance; if a shapefile, it assumes that <input>.shp contains points, multipoints, lines or polygons, and that <input>.dbf contains their attributes.

- proj, optional: A projection function to convert coordinates. It must accept the “inverse” keyword.

- m, optional: A Basemap instance used to convert coordinates for plotting.

- inverse, optional: Invert the conversion with proj.

- clip, optional: If in the form (xmin, ymin, xmax, ymax), clips to this box; if a polygon-like argument, it clips to this polygon (see polygons() for arguments). If simply True and m is present, it clips to the bounds of m.

- min_area, optional: Minimal area to keep a polygon.

- samp, optional: An integer to undersample coordinates of polygons and lines.

- shapetype, optional:

  - if 0, it must only deal with points;

  - if 1, only polylines;

  - if 2, only polygons (conversion 1<->2 is automatic).

- INPUT_MULTIPOINTS = 8

- INPUT_POINTS = 1

- INPUT_POLYGONS = 5

- INPUT_POLYLINES = 3

- LINE = 1

- LINES = 1

- POINT = 0

- POINTS = 0

- POLY = 2

- POLYGON = 2

- POLYGONS = 2

- POLYS = 2

-

clip(zone, copy=True, sort=True, reverse=True, **kwargs)

Clip to zone.

Params: - zone: [xmin, ymin, xmax, ymax]

- copy, optional: If True, make a copy of the current instance, else simply update the current list of shapes.

- If copy==True, other parameters are passed to the initialization of the new instance.

-

get_data(key=None, proj=None)

Get the numeric version of the list of GEOS objects (polygons, etc.).

Params: - key: A slice selector applied to the list.

- proj: True, or a callable to project or re-project coordinates.

-

get_points(key=None, split=True, proj=None)

Get all the points from all the shapes as a tuple (x, y).

-

get_shapes(key=None, proj=None)

Get the list of GEOS objects (polygons, etc.).

Params: - key: A slice selector applied to the list.

- proj: True, or a callable to project or re-project coordinates.

-

plot(select=None, ax=None, fill=None, points=False, lines=True, fillcolor=None, color='k', s=None, linewidth=None, m=None, show=True, alpha=1, autoscale=True, title=None, **kwargs)

Plot shapes.

Params: - select, optional: Argument for selecting shapes in the list [default: None].

- fill, optional: Force filling (True/False), else guessed from the shape type, i.e. filling for polygons only [default: None].

- ax, optional: Axes instance.

- m, optional: Map instance (created with map2()) or a Basemap instance.

- points, optional: Plot shapes as points.

- lines, optional: Plot shapes as lines (if of type POINTS).

- fill_<params>, optional: <param> is passed to PolyCollection.

- lines_<params>, optional: <param> is passed to LineCollection or to PolyCollection.

- points_<params>, optional: <param> is passed to scatter.

- m_<params>, optional: <param> is passed to map2 if m is True.

- autoscale, optional: Whether to autoscale axis limits.

-

resol(deg=True)

Compute the mean “resolution” of the shapes based on the first shape.

deg:

- if False: return a resolution in meters as the median distance between points;

- if True: return the median distance between points as a resolution in degrees (xres, yres).

-

sort(reverse=True)

Sort shapes according to their surface or length.

- reverse: If True, larger polygons come first [default: True].
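Sorting by surface, together with the min_area option of the constructor, can be sketched with the shoelace formula for polygon area. This is an illustration on plain lists of (x, y) vertices rather than GEOS objects; the function names are hypothetical, and treating min_area as an inclusive threshold is an assumption.

```python
def polygon_area(coords):
    """Absolute area of a simple polygon given as [(x, y), ...] (shoelace formula)."""
    n = len(coords)
    twice_area = 0.0
    for i in range(n):
        x0, y0 = coords[i]
        x1, y1 = coords[(i + 1) % n]  # wrap around to close the ring
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


def filter_and_sort(polygons, min_area=None, reverse=True):
    """Drop polygons smaller than min_area, then sort them by area."""
    if min_area is not None:
        polygons = [p for p in polygons if polygon_area(p) >= min_area]
    # reverse=True puts the largest polygons first.
    return sorted(polygons, key=polygon_area, reverse=reverse)
```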

-

xy

XY coordinates as a (2, npts) array.

-

class XYZ(xyz, m=None, units=None, long_name=None, transp=True, trans=False, magnet=0, rsamp=0, id=None, **kwargs)

Bases: object

Class to manipulate xyz data (randomly spaced).

xyz: It can be either

- a .xyz ASCII file, or a netcdf/grd file with variables x, y and z,

- a (x, y, z) tuple,

- a (3, npts) array,

- another XYZ instance.

long_name: Long name.

units: Units.

transform: It can be either

- a factor applied to z at initialisation,

- a function that takes z as its only argument to filter its data.

exc: Polygons to exclude data (see exclude()). Several polygons must be passed as a tuple (poly1, poly2, …).

sel: Polygons to select data (see select()). Several polygons must be passed as a tuple (poly1, poly2, …).

load_<keywords>: Keywords are passed to numpy.loadtxt().

rsamp_<keywords>: Keywords are passed to rsamp().

Other keywords are set as attributes.

Slicing:

- len(obj): number of xyz data points

- obj[1:3]: [(x1,y1,z1),(x2,y2,z2)]

Operations:

    >>> xyz += 2
    >>> xyz3 = xyz1 + xyz2/2. # concatenation

-

clip(zone=None, margin=None, inverse=False, mask=False, id=None, **kwargs)

Geographical selection of part of the data.

- zone: (xmin, ymin, xmax, ymax), a float/int, or a complex polygon (see polygons()).

- margin: Margin around zone relative to the resolution (see resol()).

- inverse: Invert the selection.

- mask: zone must be interpreted as a mask.

-

consolidate()

Apply radius undersampling and all exclusions and selections to the data, then reset them.

-

contains(x, y)

Check if one or several points are within the convex hull.

- x, y: X, Y positions as floats, lists or numpy arrays.
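The convex-hull test behind hull() and contains() can be sketched in pure Python with Andrew's monotone chain algorithm followed by an edge-side test. This is an illustration only: vacumm relies on its own geometry helpers (_geoslib), and the function names below are hypothetical.

```python
def _cross(o, a, b):
    """Z component of (a - o) x (b - o); positive for a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other one.
    return lower[:-1] + upper[:-1]


def hull_contains(hull, x, y):
    """True if (x, y) is inside or on a counter-clockwise convex polygon.

    Assumes the hull has at least three non-collinear vertices.
    """
    n = len(hull)
    for i in range(n):
        # A point strictly to the right of any CCW edge is outside.
        if _cross(hull[i], hull[(i + 1) % n], (x, y)) < 0:
            return False
    return True
```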

-

del_rsamp()

Reset rsamp without affecting data.

-

exclude(*zones)

Add one or more zones where data are not used.

A zone can be:

- an argument to polygons() to get a _geoslib.Polygon instance,

- another XYZ instance whose convex hull (see hull()) is used as a delimiting area.

Usage:

    >>> xyz.exclude([[-8,43],[-5.5,43],[-6,45.]],[[-10,45],[-7,47],[-10,49.]])
    >>> xyz.exclude(polygon1,polygon2)
    >>> xyz.exclude(xyz1,[-5,42,-3,48.])

See also

-

get_grid(res=None, xmin=None, xmax=None, ymin=None, ymax=None, relres=0.5, degres=False, id='xyz_grid')

Generate a rectangular grid based on x/y positions and resolution.

res: Resolution. It can be:

relres: Relative resolution factor applied to res when the resolution is guessed (res=None).

degres: When res is explicitly given, it is interpreted as degrees if degres is True.

xmin, xmax, ymin, ymax: Bounds of the grid. If not specified, bounds of the dataset are used (see xmin(), etc.).

Note

Resolutions are slightly decreased when they do not evenly divide the grid extent. Therefore, the grid extent is always preserved.
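The adjustment rule in this note can be sketched per axis: assuming the number of cells is rounded up, the resolution is reduced just enough for the bounds to stay fixed. The function names are hypothetical and this is not the code of get_grid().

```python
import math


def adjust_resolution(vmin, vmax, res):
    """Shrink `res` so that it divides the extent vmax - vmin exactly.

    Returns (adjusted_res, number_of_points); the bounds are unchanged.
    """
    extent = vmax - vmin
    # Rounding the cell count up can only decrease the resolution.
    ncells = max(1, math.ceil(extent / res))
    return extent / ncells, ncells + 1


def axis_values(vmin, vmax, res):
    """Regularly spaced axis values from vmin to vmax inclusive."""
    step, npts = adjust_resolution(vmin, vmax, res)
    return [vmin + i * step for i in range(npts)]
```

For example, a requested resolution of 3.0 over [0, 10] becomes 2.5, giving the axis 0.0, 2.5, 5.0, 7.5, 10.0.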

-

get_xyz(mask=True, split=False)

Return coordinates and data as a (3, npts) array:

- row 0: X

- row 1: Y

- row 2: Z

-

grid

Rectangular grid based on x/y positions and resolution.

-

hull(out='xy', mask=True)

Return the convex hull.

Returns: Depends on out:

- "xy": (xhull, yhull)

- "ind": indices of points

- "poly": _geoslib.Polygon instance

-

interp(xyo, xyz=False, **kwargs)

Interpolate to (xo, yo) positions using nat.Natgrid.

Returns: An XYZ instance.

-

magnet

Magnet integer attribute.

-

plot(size=5.0, color=None, alpha=1.0, masked_alpha=0.3, masked_size=None, linewidth=0.0, show=True, savefig=None, savefigs=None, m=None, colorbar=True, title=None, units=None, cmap=None, mode='valid', zorder=100, masked_zorder=50, margin=2, xmin=None, xmax=None, ymin=None, ymax=None, xres=None, yres=None, **kwargs)

Scatter plot of bathymetry points.

Params: - mode, optional: ‘valid’, ‘masked’ or ‘both’.

- size, optional: Size of markers.

- color, optional: Color of markers.

- alpha, optional: Alpha transparency of markers.

- zorder, optional: zorder of markers.

- m, optional: Use this Basemap instance to plot the points.

- masked_size, optional: Size of masked markers.

- masked_alpha, optional: Alpha transparency of masked markers.

- masked_zorder, optional: zorder of masked markers.

-

resol(convex_hull_method='delaunay', exc=[], deg=False)

Return the mean resolution.

Algorithm: median distance between facets of triangles.

Returns: (xres, yres)

-

rsmap

Radius of undersampling.

-

save(xyzfile, **kwargs)

Save to a file.

xyzfile: Output file name:

- write a netcdf file if it ends with “.nc” or “.grd”,

- write a sinux file if it ends with “.snx”,

- else write an ASCII file with 3 columns.

Other keywords are passed to numpy.savetxt() for ASCII saving.

-

select(*zones)

Add one or more zones (polygons) where only these data are used.

A zone is an argument to polygons() to get a _geoslib.Polygon instance.

Usage:

    >>> xyz.select([[-8,43],[-5.5,43],[-6,45.]],[[-10,45],[-7,47],[-10,49.]])
    >>> xyz.select(polygon1,polygon2)

See also

-

set_magnet(magnet)

Set the magnet integer attribute. If set to 0, there is no magnet effect.

Note

Useful only for mixing XYZ instances.

-

set_res(xres, yres=None)

Set the resolution of the dataset.

If yres is not given, it is set to xres. A negative value is assumed to be in meters (not in degrees).

-

shadows()

Get the polygons defining the ‘shadow’ of this dataset.

It consists of a tuple of two elements:

- the convex hull as a polygon,

- a list of exclusion polygons that intersect the convex hull.

Therefore, a point in the shadow must be inside the convex hull polygon, and outside the exclusion polygons.

Returns: (hull_poly, [exclusion_poly1,…])
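The membership rule stated above (inside the hull, outside every exclusion polygon) can be sketched with a ray-casting point-in-polygon test. Polygons are assumed to be plain lists of (x, y) vertices rather than _geoslib.Polygon instances, and both function names are hypothetical.

```python
def point_in_polygon(x, y, poly):
    """Ray-casting test for a simple polygon given as [(x, y), ...]."""
    inside = False
    n = len(poly)
    for i in range(n):
        x0, y0 = poly[i]
        x1, y1 = poly[(i + 1) % n]
        # Does the horizontal ray from (x, y) towards +x cross this edge?
        if (y0 > y) != (y1 > y):
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                inside = not inside
    return inside


def in_shadow(x, y, shadow):
    """Apply the shadow rule to a (hull_poly, [exclusion_poly, ...]) tuple."""
    hull_poly, exclusions = shadow
    return point_in_polygon(x, y, hull_poly) and not any(
        point_in_polygon(x, y, poly) for poly in exclusions)
```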

-

tocfg(cfg, section, param=None)

Dump one or all parameters as options to a cfg section.

- cfg: ConfigParser object

- section: Section of cfg

- param: A single or a list of parameter names
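Dumping parameters to a ConfigParser section can be sketched with the standard configparser module. The helper params_to_cfg is hypothetical: it requires explicit parameter names (the "all parameters" case of tocfg() is not modeled) and stores values with str(), since ConfigParser only holds strings.

```python
import configparser


def params_to_cfg(obj, cfg, section, params):
    """Store one or several attributes of `obj` as options of a cfg section."""
    if isinstance(params, str):
        params = [params]  # accept a single parameter name
    if not cfg.has_section(section):
        cfg.add_section(section)
    for name in params:
        # ConfigParser stores strings only, so values are converted with str().
        cfg.set(section, name, str(getattr(obj, name)))
    return cfg
```

Values can then be read back with the typed getters, for instance cfg.getint(section, "magnet") or cfg.getboolean(section, "transp").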

-

togrid(grid=None, mask=False, cgrid=False, **kwargs)

Interpolate to a regular grid.

grid: The output grid. It can be either:

- a (x, y) tuple, a grid, or an MV2 variable with a grid,

- None, in which case it is guessed using grid().

mask: It can be either:

mask_<param>: <param> is passed to polygon_mask() to evaluate the mask from the polygons.

grid_<param>: <param> is passed to grid().

cgrid: If True, return the bathymetry at U- and V-points, else at T-points.

Other keyword parameters are passed to griddata() for regridding.

Return: (Zx, Zy) or Z, depending on cgrid.

-

toxy(xo, yo, mask=None, outtype='tuple')

Interpolate on random points using xy2xy().

xo, yo: Output positions.

mask: It can be either:

outtype: Define the output type:

- "tuple": as a tuple (x, y, z),

- "xyz": as an xyz block,

- "XYZ": as an XYZ (or subclass) instance.

-

transp

Transparency boolean attribute.

-

x

Valid X positions.

-

xmax

Maximum X value.

-

xmin

Minimum X value.

-

xy

Coordinates as a (2, npts) array.

-

xyz

Coordinates and data as a (3, npts) array.

-

y

Valid Y positions.

-

ymax

Maximum Y value.

-

ymin

Minimum Y value.

-

z

Valid Z values.

-

zmax

Maximum Z value.

-

zmin

Minimum Z value.

-

class XYZMerger(*datasets, **kwargs)

Bases: object

Mix different bathymetries.

-

plot(color=None, marker=None, mode='cluster', title='XYZ merger', show=True, colorbar=True, savefig=None, savefigs=None, legend=True, xmin=None, xmax=None, ymin=None, ymax=None, margin=5, xres=None, yres=None, **kwargs)

alpha: Alpha transparency:

- applied to all points if mode="cluster",

- applied to hidden points if mode="data".

mode: Display mode:

- "cluster": Points from different datasets have different colors and markers, and hidden points are transparent.

- "data": Points have the same marker, colors depend on the Z value, and hidden points are masked.

marker: Define a single or several markers to be used.

legend: Show a legend if mode="cluster".

title: Title of the plot.

m: Basemap instance.

m_margin: Margin for m, relative to the mean resolution (see XYZ.resol()).

m_<keywords>: Keywords are passed to map().

Extra keywords are passed to XYZ.plot().

-

xyz

Coordinates and data as a (3, npts) array.

-

grib_get_names(gribfile)

Return a list of the unique parameter names of a grib file (using the grib messages’ shortName).

-

grib_read_files(filepattern, varname, time=None, select=None, torect=None, samp=None, grid=None, squeeze=None, atts=None, verbose=False, **kwargs)

Read cdms2 variables through one or a set of grib files.

Examples:

    >>> vardict = grib_read_files("r0_2010-%m-%d_00.grb", 'u', ('2010-08-10', '2010-08-15', 'cc'), samp=[2, 1, 3])
    >>> vardict = grib_read_files("r0_2010-??-??_00.grb", dict(shortName='u'), select=dict(lon=(-10.0,-5.0), lat=slice(100,200)), grid=smallgrid)
    >>> vardict = grib_read_files("myfiles*.grb", [dict(shortName=['u', 'u10']), dict(shortName=['v','v10'])])

Params: - filepattern: must be:

  - a file pattern (see list_forecast_files() for more information),

  - one or more string(s) of the file(s) to be processed; the string(s) may contain wildcard characters.

- varname: Name of the grib variable(s) to read.

  - If a simple name, it reads this variable using the grib message’s shortName.

  - If a list of names, it reads them all.

  If a name is a dict, then it is used as a grib selector, in which case the user should not specify selectors which may interfere with the select keyword (see select()).

- time, optional: Time selector for files and data. This keyword is mandatory if filepattern has date patterns.

- select, optional: An additional selector applied after data have been loaded. It can be a dictionary or a Selector instance (see create_selector()).

- torect, optional: If True, try to convert the output grid to rectangular using curv2rect() (see ncread_var()).

- samp, optional: Undersampling rate as a list of the same size as the rank of the variable. Set values to 0 or 1 for no undersampling.

- grid, optional: A grid to regrid the variable on.

- grid_<keyword>, optional: keyword is passed to regrid().

- squeeze, optional: Argument passed to ncread_var() to squeeze out singleton axes.

- atts: Attributes dict (or list of attribute dicts, one for each varname).

- verbose: Function to be called for logging (sys.stderr if True, disabled with False).

Return: If varname is a list of names or dicts:

- a dict of loaded variables as cdms2.tvariable.TransientVariable; the dict keys are filled with the corresponding varname value if it is a string, or with the loaded message’s shortName/name/parameterName.

Else:

- the loaded variable as cdms2.tvariable.TransientVariable.

-

list_forecast_files(filepattern, time=None, check=True, nopat=False, patfreq=None, patfmtfunc=None, patmargin=None, verbose=False, sort=True)

Get a list of forecast files according to a file pattern.

Params: - filepattern: It can be either:

  - a glob matching pattern ("file??.nc"),

  - a date pattern ("file%Y-%m-%d.nc"),

  - a URL ("http://site.net/file.nc"),

  - a list of files.

- time: A time selector, e.g. ('2000', '2001', 'co').

  Warning

  This argument is mandatory if filepattern is a date pattern, and not used if filepattern is of another type.

- check, optional: Check if local files exist.

- nopat, optional: Never consider that input patterns have date patterns.

- patfreq, optional: Frequency of files used to generate file names for each date when filepattern is a date pattern.

- patfmtfunc, optional: Function to use in place of strftime() to generate file names. It must take as arguments a date pattern and a CDAT component time.

- sort, optional: If True, files are sorted alphabetically after being listed; if a callable function, they are sorted using this function (files=sort(files)).

  Warning

  Files are sorted alphabetically by default!

Examples:

    >>> # Preferred way
    >>> list_forecast_files('mrsPRVMR_r0_%Y-%m-%d_00.nc', ('2010-08-06', '2010-08-15'))
    >>> list_forecast_files('http://www.ifremer.fr/data/mrsPRVMR_r0_%Y-%m-%d_00.nc', ('2010-08-06', '2010-08-15'))
    >>> list_forecast_files('mrsPRVMR_r0_%Y-%m-%d_*.nc', ('2010-08-06', '2010-08-15'))

    >>> # Possible way
    >>> list_forecast_files('mrsPRVMR_r0_2010-05-??_00.nc')
    >>> list_forecast_files(['mrsPRVMR_r0_2010-05-??_00.nc', 'mrsPRVMR_r0_2010-05-??_60.nc'])

    >>> # Just to filter existing files
    >>> list_forecast_files(['mrsPRVMR_r0_2010-05-06_00.nc', 'mrsPRVMR_r0_2010-05-07_00.nc'])

    >>> # Simple conversion to a list
    >>> list_forecast_files('http://www.ifremer.fr/data/mrsPRVMR_r0_2010-05-06_00.nc')
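For a date pattern, file names come from applying strftime() to the pattern for each date covered by the time selector. A minimal sketch under stated assumptions: a daily frequency (the role of patfreq), inclusive bounds, and Python dates instead of CDAT component times. The name expand_daily_pattern is hypothetical, and the real function also handles globs, URLs and existence checks.

```python
from datetime import date, timedelta


def expand_daily_pattern(pattern, start, end):
    """File names obtained by applying strftime to `pattern` for each day.

    Both `start` and `end` are included in this sketch.
    """
    names = []
    day = start
    while day <= end:
        names.append(day.strftime(pattern))
        day += timedelta(days=1)
    # Alphabetical order, matching the default sort described above.
    return sorted(names)
```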

-

nccache_get_time(f, timeid=None, ro=False)

Get a time axis from the cache or from a netcdf file.

A time axis not in the cache is read using ncget_time(), then stored in the cache.

Params: - f: File object or name.

- timeid, optional: Single or list of time ids for ncget_time().

Example:

>>> taxis = nccache_get_time('myfile.nc', ['time','time_counter'])

-

ncfind_axis(f, specs, ignorecase=True, regexp=False, **kwargs)

Find an axis in a netcdf file using ncfind_obj().

-

ncfind_obj(f, specs, ignorecase=True, regexp=False, ids=None, searchmode=None, **kwargs)

Find a variable or an axis in a netcdf file using a name, a list of names, or matching attributes such as standard_name, long_name and units.

Objects are checked using ncmatch_obj(). It first checks the standard_name, then the names (ids), the axis, and finally the long_names and units.

Example:

    >>> f = cdms2.open('temp.nc')
    >>> ncfind_obj(f, 'temp')
    >>> ncfind_obj(f, ['temperature','temp'])
    >>> ncfind_obj(f, ('temperature','TEMP'), ignorecase=False)
    >>> ncfind_obj(f, dict(standard_name="sea_surface_temperature"))
    >>> ncfind_obj(f, 'lon')

Params: - f: A cdms2.dataset.CdmsFile.

- specs: A string or list of strings to look for, or a dictionary with keys “name”, “standard_name”, “long_name”, “units” and “axis”.

- ignorecase, optional: Ignore name case when searching for the variable.

- regexp, optional: Interpret long_names and units as regular expressions that must be compiled.

- searchmode, optional: Search order when specs is a dictionary and not an OrderedDict. It defaults to None (equivalent to 'snlua'), which means: standard_name -> name -> long_name -> units -> axis (first letters). If specs is an OrderedDict, it simply acts as a filter to restrict the search.

Return: The first matching object name, or None if not found.
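The searchmode letters can be read as an ordered list of attribute checks. As a simplified sketch, assuming objects are represented as a dict of id -> attribute dict (not cdms2 objects), every spec value is a list of accepted strings, and regular expressions and the OrderedDict case are left out, a search loop could look like this (find_first is a hypothetical name):

```python
def find_first(objects, specs, searchmode="snlua", ignorecase=True):
    """Return the id of the first object matching `specs`, or None.

    objects: dict id -> dict of attributes (standard_name, long_name, units, axis).
    specs: dict whose keys are among "name", "standard_name", "long_name",
    "units", "axis"; each value is a list of accepted values.
    """
    keys = {"s": "standard_name", "n": "name", "l": "long_name",
            "u": "units", "a": "axis"}

    def norm(value):
        return value.lower() if ignorecase and isinstance(value, str) else value

    # Each letter of searchmode selects the next attribute to test.
    for letter in searchmode:
        key = keys[letter]
        if key not in specs:
            continue
        wanted = {norm(v) for v in specs[key]}
        for oid, atts in objects.items():
            value = oid if key == "name" else atts.get(key)
            if norm(value) in wanted:
                return oid
    return None
```

With this ordering, a standard_name match takes priority over a name match, so the most specific metadata wins when both are given.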

-

ncfind_var(f, id, ignorecase=True, regexp=False, **kwargs)

Find a variable in a netcdf file using ncfind_obj().

-

ncget_axis(f, checker, ids=None, ro=False, checkaxis=False, **kwargs)

Get an axis in a netcdf file by searching all axes and variables.

If checker is a list, dict or tuple, ncfind_axis() is called directly to search for the axis within the file.

Params: - checker: Can be either:

  - a generic name such as ‘x’ or ‘lon’,

  - a function to check that an object is an axis of appropriate type (such as islon()); this function must accept the ‘ro’ keyword (‘readonly’),

  - an argument to ncfind_axis(): list, dict, tuple.

- ids, optional: A list of ids to focus the search.

Return: The axis or None if not found.

-

ncget_fgrid(f, gg)

Get the file grid that matches a transient grid or variable.

Matching is checked using ids of longitudes and latitudes.

Params: - f: file name or object.

- gg: cdms2 grid or variable with a grid.

Return: A FileGrid instance or None.

-

ncget_grid(f, ids=None, torect=False)

Get the grid of a netcdf file.

Params: - f: Netcdf file name or object.

- ids, optional: List of ids to help searching.

-

ncget_lat(f, ids=None, checkaxis=False, ro=False)

Get the latitude axis of a netcdf file.

Params: - f: Netcdf file name or object.

- ids, optional: List of ids to help searching.

-

ncget_level(f, ids=None, checkaxis=False, ro=False)

Get the level axis of a netcdf file.

Params: - f: Netcdf file name or object.

- ids, optional: List of ids to help searching.

-

ncget_lon(f, ids=None, checkaxis=False, ro=False)

Get the longitude axis of a netcdf file.

Params: - f: Netcdf file name or object.

- ids, optional: List of ids to help searching.

-

ncget_time(f, ids=None, checkaxis=False, ro=False)

Get the time axis of a netcdf file.

Params: - f: Netcdf file name or object.

- ids, optional: List of ids to help searching.

-

ncget_var(f, *args, **kwargs)

Get a variable object as returned by cdms2.dataset.CdmsFile.getVariable(), which is equivalent to f[varname].

Return: A cdms2.fvariable.FileVariable or None if not found.

See: ncfind_var()

-

ncmatch_obj(obj, id=None, standard_name=None, long_name=None, units=None, axis=None, ignorecase=True, searchmode=None, **kwargs)

Check if an MV2 object (typically from a netcdf file) matches names, standard_names, etc.

It first checks the standard_name, then the names (ids), the axis, and finally the long_names and units.

Params: - obj: An MV2 array.

- standard_name, optional: List of possible standard_names.

- id, optional: Name (id) of this array, which defaults to the id attribute.

- axis, optional: Axis type, as one of ‘x’, ‘y’, ‘z’, ‘t’.

- long_name, optional: List of possible long_names or a callable expression (such as a regular expression method).

- units, optional: Same as long_name but for units.

Example:

    >>> ncmatch_obj(sst, standard_name='sea_surface_temperature', id=['sst'])
    >>> import re
    >>> ncmatch_obj(sst, long_name=re.compile('sea surface temp').match)

-

ncread_axis(f, name, select=None, ignorecase=True, mode='raise')

Read a 1D axis.

Note

Please use ncread_var() to read 2D axes.

Params: - mode, optional: If 'raise', raise an IOError if not found, else return None.

-

ncread_best_estimate(filepattern, varname, *args, **kwargs)

Read the best estimate of a variable through a set of netcdf forecast files.

Warning

This function is deprecated. Please use ncread_files(), switching the first two arguments.

This is equivalent to:

ncread_files(varname, filepattern, *args, **kwargs)

-

ncread_files(filepattern, varname, time=None, timeid=None, toffset=None, select=None, atts=None, samp=None, grid=None, verbose=False, ignorecase=True, torect=True, squeeze=False, searchmode=None, nibeid=None, sort=True, nopat=False, patfreq=None, patfmtfunc=None, check=True, bestestimate=True, **kwargs)

Read the best estimate of a variable through a set of netcdf files.

Warning

Files are listed using the function list_forecast_files(). Please read its documentation before using the current function.

Examples:

    >>> var = ncread_files("r0_2010-%m-%d_00.nc", 'xe', ('2010-08-10', '2010-08-15', 'cc'), samp=[2, 1, 3])
    >>> var = ncread_files("http://www.net/r0_2010-%m-%d_00.nc", 'xe', ('2010-08-10', '2010-08-15', 'cc'), timeid='TIME', toffset=(1, 'day'))
    >>> var = ncread_files("r0_2010-??-??_00.nc", 'xe', select=dict(lon=(-10,-5), z=slice(23,24)), grid=smallgrid)
    >>> xe, sst, u = ncread_files("myfiles*.nc", [('xe', 'sla'),('sst','temp'),'u'])

Params: - varname: Name of the netcdf variable to read.

  - If a simple name, it reads this variable.

  - If a list of names, it reads them all.

  - If a list of lists of names, each variable is searched for using the sublist of names.

- filepattern: File pattern. See list_forecast_files() for more information.

- time, optional: Time selector. This keyword is mandatory if filepattern has date patterns.

- toffset: Skip the first time steps. See NcIterBestEstimate for more information.

- select, optional: An additional selector for reading the variable. It can be a dictionary or a Selector instance (see create_selector()).

- atts: Attributes dict (or list of attribute dicts, one for each varname) (see ncread_var()).

- samp, optional: Undersampling rate as a list of the same size as the rank of the variable. Set values to 0 or 1 for no undersampling.

- grid, optional: A grid to regrid the variable on.

- grid_<keyword>, optional: keyword is passed to regrid().

- timeid, optional: Time id (otherwise it is guessed).

- ignorecase, optional: Ignore variable name case (see ncfind_var()).

- torect, optional: If True, try to convert the output grid to rectangular using curv2rect() (see ncread_var()).

- Extra kwargs are used to refine the selector initialized with select.

- squeeze, optional: Argument passed to ncread_var() to squeeze out singleton axes.

- searchmode, optional: Search order (see ncfind_obj()).

- sort/nopat/patfreq/patfmtfunc/check, optional: These arguments are passed to list_forecast_files().

Raise: NcIterBestEstimateError in case of error.
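The samp option (one rate per dimension, 0 or 1 meaning no undersampling) maps naturally onto strided slices. A minimal sketch, assuming the rates are applied as plain strides starting from the first index, which may differ from what ncread_files() does internally; undersampling_slices is a hypothetical name:

```python
def undersampling_slices(samp):
    """Convert one undersampling rate per axis into a tuple of slices.

    A rate of 0, 1 or None keeps every point along that axis.
    """
    return tuple(slice(None, None, rate if rate and rate > 1 else 1)
                 for rate in samp)
```

With a numpy array of matching rank, data[undersampling_slices([2, 1, 3])] then applies all three strides in a single indexing operation.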

-

ncread_var(f, vname, *args, **kwargs)

Read a variable in a netcdf file, with some extra features.

In addition to a simple f(vname, *args, **kwargs):

- vname can be a list of variable names, and it takes the first one found, ignoring the case by default.

- If a variable is on a grid that is stored as curvilinear but is actually rectangular, its grid is converted to a rectangular grid.

If the variable is not found, it raises an error (see the mode parameter).

Params: - f: File descriptor.

- vname: Variable name(s) (see ncfind_var()).

- ignorecase, optional: Case-insensitive search for the name of the variable.

- Other arguments and keywords are passed to f.

- atts: Dictionary of attributes to apply.

- squeeze, optional: A single argument (or a list of them) interpreted as a squeeze specification passed to squeeze_variable(), to squeeze out singleton axes.

- torect, optional: If True, try to convert the output grid to rectangular using curv2rect().

- mode, optional: If 'raise', raise an IOError if not found, else return None.

Example:

    >>> var = ncread_var(f, ['u', 'u2'], lon=(45, 47), atts={'id':'U'})